AI coding assistants (e.g., Claude Code and other agentic dev tools) don’t just generate code anymore. They act: read files, run commands, pull/push configs, and chain tools/agents.

But most “monitoring” still stops at the surface: the prompt.

What’s missing is the part that matters in agentic workflows: everything that happens between the prompt and the answer.

The Silent Exposure Path

A real example: a developer asks an agent to debug an AWS authentication issue. The agent inspects the environment, checks configs, and runs diagnostics, often reading .env files and other sources that may contain access keys and secret keys (AK/SK), tokens, or API keys.

Even if secrets are never pasted into chat, never shown in the UI, and never committed… they can still be:

- Accessed by the agent runtime

- Pulled into model context

- Exposed beyond the local machine (e.g., to an external AI service)

That’s the silent exposure path: agent-driven, unintentional, invisible.
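To make that path concrete, here is a minimal Python sketch of classifying what an agent reads before the content reaches model context. Everything named here is an assumption for illustration: `SECRET_PATTERNS` and `classify_text` are hypothetical, the two regexes are deliberately small, and a real scanner would need a much larger rule set. The sample values are AWS's published documentation placeholders, not real credentials.

```python
import re

# Hypothetical, deliberately tiny rule set (an assumption for illustration).
SECRET_PATTERNS = {
    # AWS access key IDs commonly start with AKIA (long-term) or ASIA (temporary).
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    # Any NAME=value assignment whose name mentions a secret-like word.
    "secret_assignment": re.compile(
        r"\b[A-Z0-9_]*(?:SECRET|TOKEN|API_KEY|PASSWORD)[A-Z0-9_]*\s*=\s*\S+",
        re.IGNORECASE,
    ),
}


def classify_text(text):
    """Return the sorted labels of every pattern found in the text."""
    return sorted(
        label for label, pattern in SECRET_PATTERNS.items() if pattern.search(text)
    )


# Example: the content of a .env file the agent read while debugging.
env_content = (
    "AWS_ACCESS_KEY_ID=AKIAIOSFODNN7EXAMPLE\n"
    "AWS_SECRET_ACCESS_KEY=wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY\n"
    "DEBUG=true\n"
)
labels = classify_text(env_content)
```

The point of the sketch is where the check runs: on the tool output (the file read), not on the user's prompt, which in this scenario never contained a secret.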

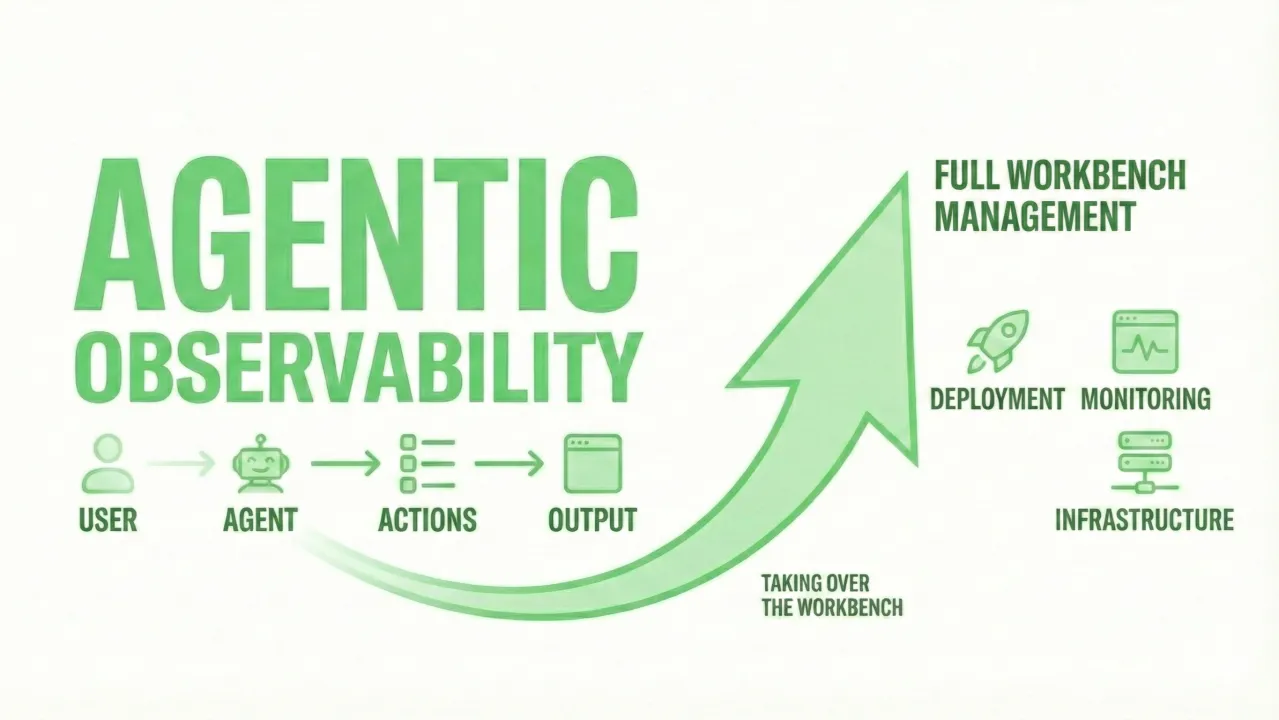

What needs to change: Agentic Observability

From: “What did the user ask?”

To: “What did the AI touch, learn, and transmit while getting the work done?”

At Netra, we focus on:

- Action lineage (user → tool → system timeline)

- Tool-use visibility (commands, files, configs, intermediate outputs)

- Data classification across user/tool/system events

- Agent-to-agent and MCP (Model Context Protocol) visibility (where data flows next)
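As a rough illustration of how action lineage and data classification fit together, the Python sketch below records each step as an event and pairs every outbound event with the sensitive events that came before it. This is not a description of Netra's implementation: `AgentEvent`, its fields, and `exposure_paths` are hypothetical names, and "anything sensitive read earlier may be in context" is a coarse assumption that a real system would refine.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class AgentEvent:
    step: int                 # position in the timeline
    actor: str                # "user", "tool", or "system"
    action: str               # e.g. "prompt", "read_file", "model_request"
    target: str               # file path, command, or endpoint
    labels: List[str] = field(default_factory=list)  # data classification labels
    egress: bool = False      # True when data leaves the local machine


def exposure_paths(events: List[AgentEvent]) -> List[Tuple[AgentEvent, List[AgentEvent]]]:
    """Pair each egress event with the labeled events that preceded it."""
    paths = []
    sensitive_so_far: List[AgentEvent] = []
    for event in sorted(events, key=lambda e: e.step):
        if event.egress and sensitive_so_far:
            paths.append((event, list(sensitive_so_far)))
        if event.labels:
            sensitive_so_far.append(event)
    return paths


# Example timeline for the AWS debugging scenario (all values are made up).
timeline = [
    AgentEvent(1, "user", "prompt", "debug my AWS auth error"),
    AgentEvent(2, "tool", "read_file", ".env", labels=["aws_access_key_id"]),
    AgentEvent(3, "tool", "run_command", "aws sts get-caller-identity"),
    AgentEvent(4, "system", "model_request", "external AI service", egress=True),
]
paths = exposure_paths(timeline)
```

Here `paths` holds one entry: the step 4 model request, preceded by the step 2 read of `.env`. The chat transcript alone (step 1) would show none of this.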

If you’re rolling out AI dev tools: do you see runtime behavior—or only the chat transcript?